With the prevalent use of Agile methodologies, organizations are grappling with the challenge of scaling development across numerous teams. This has led to the emergence of diverse scaling strategies, from complex ones such as “SAFe” to more simplified methods such as “LeSS”, with some organizations devising their own approaches. While multiple studies have explored the organizational challenges associated with different scaling approaches, so far, no one has compared these strategies based on empirical data derived from a uniform measure. This makes it hard to draw robust conclusions about how different scaling approaches affect Agile team effectiveness. Thus, the objective of this study is to assess the effectiveness of Agile teams across various scaling approaches, including “SAFe”, “LeSS”, “Scrum of Scrums”, and custom methods, as well as those not using scaling. This study focuses initially on responsiveness, stakeholder concern, continuous improvement, team autonomy, management approach, and overall team effectiveness, followed by an evaluation based on stakeholder satisfaction regarding value, responsiveness, and release frequency. To achieve this, we performed a comprehensive survey involving 15,078 members of 4,013 Agile teams to measure their effectiveness, combined with satisfaction surveys from 1,841 stakeholders of 529 of those teams. We conducted a series of inferential statistical analyses, including Analysis of Variance and multiple linear regression, to identify any significant differences, while controlling for team experience and organizational size. The findings of the study revealed some significant differences, but their magnitude and effect size were too small to have practical significance.
In conclusion, the choice of Agile scaling strategy does not markedly influence team effectiveness, and organizations are advised to choose a method that best aligns with their previous experiences with Agile, organizational culture, and management style.


Agile methodologies have revolutionized software development by championing iterative processes, adaptability, and a stakeholder-focused approach, ensuring the efficient delivery of high-quality products. This transformation is evident as many organizations now employ Agile processes in their operations. In an annual industry survey, 80% of respondents reported using Agile as their predominant approach in 2022 (VersionOne 2022). However, because this survey was drawn primarily from the Agile community, it is likely subject to selection bias. Another survey by KPMG in 2019 among top executives found that 81% reported Agile transformation initiatives in the past three years (De Koning and Koot 2019).

In its initial adoption stage, Agile was largely confined to single teams (Strode et al. 2012), and there was a notable absence of guidance on how to scale Agile practices across multiple teams. However, the success of Agile at the team level has not only expanded its application beyond the realm of software development but has also pushed its implementation in large-scale settings (Dingsøyr et al. 2018).

In recent years, Agile scaling frameworks have become increasingly popular to address this gap (Mishra and Mishra 2011), including the Scaled Agile Framework (“SAFe”; Inc 2018), Large-Scale Scrum (“LeSS”; LeSS Framework 2023), “Nexus” (Nexus 2023), Scrum@Scale, and “Scrum of Scrums” (Sutherland 2001; Schwaber 2004). “SAFe” has become the most popular, with 53% of organizations opting for it (VersionOne 2022), followed by Scrum@Scale (often referred to as “Scrum of Scrums”) with 28%. “LeSS” is adopted by 6% of organizations and “Nexus” by 3%. “SAFe” has also been identified as the most popular in scholarly investigations (Alqudah and Razali 2016; Putta et al. 2018; Conboy and Carroll 2019). However, many organizations also develop their own approaches to scale Agile development across many teams (Edison et al. 2022). These results show that organizations pick different solutions to scale, without a universally agreed best practice for software teams.

Among the myriad approaches, “SAFe” is often viewed as the most complex (Ebert and Paasivaara 2017). Some anecdotal evidence suggests it is not well-received within the professional Agile community. For example, a non-academic poll among 505 professionals by a popular industry blog found that a notable number of participants were unlikely to recommend “SAFe” (Wolpers 2023). Furthermore, a dedicated website collects criticisms of “SAFe” from Agile experts as well as case studies (Hinshelwood 2023).

Nevertheless, Agile scaling approaches have sparked the interest of practitioners and researchers alike. In particular, 136 articles were published in 46 venues by more than 200 authors between 2009 and 2019, accessible through IEEE Xplore, ACM Digital Library, Science Direct, Web of Science, and AIS eLibrary (Ömer Uludaǧ et al. 2022). The aim of this study is to investigate how scaling approaches like “SAFe”, “LeSS”, “Scrum of Scrums”, as well as custom approaches, impact the effectiveness of Agile teams and the satisfaction of their stakeholders. Stakeholder satisfaction has been proposed as a key indicator of success for Agile teams (Kupiainen et al. 2014; Mahnic and Vrana 2007). At the same time, job satisfaction and high team morale have been associated with team effectiveness (Verwijs and Russo 2023b; Kropp et al. 2020; Tripp et al. 2016). Hence, we frame our research question (RQ) as follows:

RQ: To what extent are the effectiveness of Agile teams and the satisfaction of their stakeholders influenced by the Agile scaling approach in use?

To answer our research question, we performed a cross-sectional study with 15,078 team members aggregated into 4,013 Agile teams. We compared their overall effectiveness and the quality of core processes of Agile teams as operationalized by Verwijs and Russo (2023b). Furthermore, we analyzed the evaluations of 1,841 stakeholders (e.g., users, customers, and internal stakeholders) for 529 of those teams. Analysis of Variance (ANOVA) and linear regression were used to identify significant differences between scaling approaches, with and without controlling for the experience of teams with Agile and the size of organizations.

Our research revealed small but statistically significant differences among scaling approaches. However, their effect sizes were too small to be practically relevant. In essence, the choice of scaling approach seems to have a negligible impact on team effectiveness and stakeholder satisfaction. Notably, among the control variables, a team’s experience with Agile emerged as a more influential factor.

In the remainder of this paper, we describe the related work in Section 2. We then discuss our research design in Section 3 and report the results of our analyses in Section 4. Finally, we discuss the implications for research and practice along with the study limitations in Section 5 and draw our conclusions by outlining future research directions in Section 6.

Organizations often engage in multi-year software engineering projects that involve the coordination of work done by many teams, either regionally, globally, or both (Ebert and Paasivaara 2017). With the rise of Agile methods and their collaborative, iterative, and human-oriented approach to software engineering, organizations are increasingly seeking ways to apply Agile principles at scale. Agile methods like Scrum and XP initially focused on intra-team collaboration and offered little guidance on how to apply them across many teams (Beck et al. 2001; Schwaber and Sutherland 2020). While this works well in small organizations or efforts that involve few teams, many challenges have been identified in the application to large-scale efforts (Maples 2009).

Empirical research on how companies can do large-scale transformations and processes has been scarce (Ebert and Paasivaara 2017), with some exceptions (Paasivaara et al. 2018; Russo 2021a). Several researchers have tried to define when a project is considered large-scale, and a taxonomy of the scale of Agile has been developed. Dingsøyr et al. (2014) state that the cost of a project is not a sufficient criterion for large-scale, as some projects might involve hardware procurement, which differs in price related to the specific country. The reliable factor in defining large-scale projects is the number of teams the practitioners are divided into, where 2-9 teams are considered large-scale, and over ten teams are considered very large-scale (Dingsøyr et al. 2014). Specifically, Dikert et al. (2016) define large-scale as “software development organizations with 50 or more people or at least six teams” with the assumption of an average team size of six to seven members.

Next, we discuss several approaches to scale Agile projects. We first discuss the “Scaled Agile Framework (SAFe)” and “Scrum of Scrums and Scrum@Scale” as the two most popular approaches, with market shares of 53% and 28%, respectively (VersionOne 2022). We then turn to “Large-Scale Scrum (LeSS)” as an example of a lightweight approach compared to “SAFe”, with an approximate market share of 6% (VersionOne 2022). We also briefly discuss other approaches, although they are not the focus of the subsequent investigation. A comparison of various scaling approaches is included in Section 2.5.

The most popular Agile scaling approach to date is the Scaled Agile Framework (“SAFe”) with an approximate market share of 53%, according to a recent industry survey (VersionOne 2022). It aims to enable large-scale software and product development by applying Agile principles at the enterprise level (Inc 2018). Developed by Dean Leffingwell, the framework combines elements of Agile, Scrum, Lean, and related methodologies and is organized into three levels: Team, Program, and Portfolio. “SAFe” emphasizes continuous improvement, collaboration, and alignment between teams and offers a range of tools and practices, including Agile Release Trains (ARTs), PI planning, and DevOps, to support these objectives. The framework is designed to help organizations achieve faster time-to-market, higher quality, and greater efficiency in their software and systems development efforts (Alqudah and Razali 2016; Inc 2018). However, some practitioners perceive “SAFe” as complex due to its attempt to incorporate all best practices and its failure to explain how to scale down (Hinshelwood 2023). “SAFe” includes many role definitions, which make managers feel comfortable, and uses Scrum practices at the team level, with the opportunity to use Kanban, while applying specific roles such as product manager, system architect, and deployment team (Ebert and Paasivaara 2017; Inc 2018).

Ciancarini et al. (2022) conducted a multivocal literature review that focused on the challenges in the adoption of “SAFe”. The study covers three main research areas: identifying the success factors, uncovering implementation issues, and discovering the effects. The success factors for “SAFe” include Leadership Support in transformation, Communication between Layers, Support from Teammates, and Trust between Teams. Therefore, it is beneficial for top management to both support and understand “SAFe”, along with a shared commitment to adopt “SAFe” in all parts of the organization for the framework to succeed. Ciancarini et al. also performed interviews with 25 respondents from 17 organizations to gain a deeper understanding of the challenges. They found that practitioners initially experience “SAFe” as very complex and overwhelming. However, the approach becomes more effective after the initial stage. According to the study, the most significant reported benefits of “SAFe” relate to better company management, such as increased productivity, shared vision, and coordination of work. The most commonly identified challenges are that “SAFe” requires a major commitment on all levels of the organization, requires resources in the form of time, and may inhibit Agility when improperly practiced and misunderstood by management (Ciancarini et al. 2022).

“Scrum of Scrums” is one of the earliest approaches to scale Agile development across multiple teams (Sutherland 2001; Schwaber 2004). It follows “SAFe” with an approximate market share of 28% (VersionOne 2022). This approach is more aptly described as a practice that is applied on top of the Scrum framework than a full framework in its own right (Kalenda et al. 2018). It builds on the “Daily Scrum” that is held every 24 hours by each Scrum team to coordinate work between its members and is timeboxed to 15 minutes. Each team then sends one member to a cross-team “Daily Scrum”, the “Scrum of Scrums”, that is also held every 24 hours to coordinate work across teams and manage dependencies (Schwaber 2004). Although the “Scrum of Scrums” is recommended for settings with up to 10 teams, multiple levels of “Scrum of Scrums” can accommodate larger scales (Sutherland 2001). The “Scrum of Scrums” is the core practice of the Scrum@Scale framework (Scrum@Scale 2023), although it adds Scaled Retrospectives, a Scrum Master for the facilitation of the “Scrum of Scrums”, and a Scrum team to remove impediments called the “Executive Action Team”. Like “LeSS”, “Scrum@Scale” is more lightweight than “SAFe” (Almeida and Espinheira 2021). Moreover, “Scrum@Scale” emerges as one of the most flexible scaling approaches in the comparative review by Almeida and Espinheira (2021), although the authors conclude that it offers little guidance for continuous improvement, shared learning, and how to deal with complex products.

Large-Scale Scrum (“LeSS”) was developed by Larman and Vodde (LeSS Framework 2023). It aims to scale Scrum, lean, and Agile development principles to large product groups. “LeSS” remains conceptually close to the Scrum Framework and is more lightweight than “SAFe” (Kalenda et al. 2018). In “LeSS”, all Scrum Teams start and end their Sprints at the same time and deliver one potentially shippable increment together in that time. Each Sprint begins with a shared Sprint Planning and ends with a shared Sprint Review and Sprint Retrospective. Work is pulled from a shared Product Backlog and is managed by a single Product Owner. While “LeSS” is recommended for up to 8 Scrum teams, multiple “LeSS” frameworks can be stacked to accommodate larger numbers in “LeSS Huge”. Paasivaara and Lassenius (2016) concluded from a case study that “LeSS (Huge)” seems most suited for products that can be broken down into relatively independent requirement areas. Otherwise, the area-specific meetings suggested by the framework for retrospectives, sprint planning, and sprint reviews become cumbersome. Almeida and Espinheira (2021) note in a comparative review that “LeSS” may be more difficult to adopt because it provides less detailed guidance than “SAFe” or “Scrum@Scale”, but that “LeSS” more purposefully embeds continuous improvement and shared learning in its approach.

More approaches have been developed to scale Agile development across multiple teams. We describe a selection below to illustrate the broadness of the landscape.

“Disciplined Agile” (DA) was developed by Ambler and Lines (2020). It provides a more comprehensive multi-phased process model for scaled Agile delivery that also includes expert roles for technical architecture, testing, domain expertise, and integration. “Nexus” is another lightweight scaling approach developed by Bittner et al. (2017) that remains conceptually close to the Scrum framework. It introduces scaled versions of the Sprint Planning, Sprint Review, and Sprint Retrospectives that are held by up to 9 teams. A “Nexus Integration Team” is introduced to coordinate the integration of work between teams and provide training, support, and coaching. Another perspective on scaling was provided by Henrik Kniberg in the “Spotify Model” (Kniberg and Ivarsson 2014). It is not a framework but rather describes how Spotify organized the scaling of its development and its culture across many teams in the early 2010s. The last specific approach we will discuss here is “Recipe for Agile Governance” (RAGE) by Thompson (2013). It provides a set of practices and roles drawn from Scrum and Lean to provide guidance at the project, program, and portfolio levels in large enterprises.

Finally, Conboy and Carroll (2019) observe that prominent corporations such as Dell, Accenture, and Intel frequently formulate their own scaling strategies. This customization is aimed at ensuring a more harmonious integration with the prevailing organizational culture and structures and at complying more effectively with regulatory mandates (Kostić et al. 2017). This trend is not exclusive to these entities; mission-critical organizations, which are often subject to stringent security prerequisites, also exhibit a preference for tailored development to meet their specific needs (Messina et al. 2016; Ciancarini et al. 2018; Russo et al. 2018).

We now turn to a comparison of the various Agile scaling approaches.

Almeida and Espinheira (2021) studied the performance of six large-scale Agile frameworks on 15 assessment criteria, including the level of control, customer involvement, and technical complexity. Their review included “Disciplined Agile”, “LeSS”, “Nexus”, “SAFe”, “Scrum@Scale”, and Spotify’s Agile Scaling Model. None performed better on all dimensions. The authors argue that the optimal approach for organizations is to adopt the framework most similar to their current mindset (Almeida and Espinheira 2021).

A systematic literature review by Edison et al. (2022) also identified challenges common to “SAFe”, “Scrum@Scale”, “Disciplined Agile”, “Spotify’s Agile Scaling Model”, and “LeSS”. They collected 191 studies across 134 different organizations that considered one or more of these approaches in primary studies published between 2003 and 2019. The authors identified 31 challenges grouped into nine distinct areas when scaling Agile: inter-team coordination, customer collaboration, architecture, organizational structure, method adoption, change management, team design, and project management. Based on 191 studies they reviewed, they conclude that none of these challenges are unique to specific large-scale methodologies. According to these authors, opting for a custom approach may lead to slightly more challenges (Edison et al. 2022). Similarly, Kalenda et al. (2018) identified 8 scaling practices that are commonly used by various scaling approaches, such as a scaled sprint review, scaled retrospectives, communities of practice (CoP), and the use of cross-skilled feature teams. They also identified 9 challenges to agile scaling that are independent of the approach used, such as too much workload, resistance to change, lack of teamwork, lack of training, and quality assurance issues. Finally, Santos and de Carvalho (2022) identified requirements management as a core challenge for scaling approaches in general. While these studies aimed to identify adoption patterns and not compare the different methodologies, the findings support those of Almeida & Espinheira by underlining the importance of context when evaluating the effectiveness of Agile frameworks (Almeida and Espinheira 2021; Edison et al. 2022). A framework that performs optimally in one setting can perform ineffectively in another.

The pattern that emerges from the literature is that one scaling approach is not clearly better than the others. Although there seems to be a preference for simpler approaches by practitioners, lightweight approaches like “LeSS”, “Scrum of Scrums” or “Nexus” do not appear to be categorically better than more complex approaches like “SAFe” or “Disciplined Agile”. Instead, contextual variables seem to be more decisive in determining what is best for an organization. However, the aforementioned studies aimed to identify challenges and success factors across primary studies (e.g., case studies) of scaling approaches as implemented in case organizations. The qualitative nature of such data does not allow statistical generalization nor does it provide a comparison on equal grounds. To date, no empirical study has been performed that directly compares scaling approaches quantitatively on key metrics (Ebert and Paasivaara 2017). This study attempts to address that gap. Such a study provides empirical support for the patterns identified in the aforementioned investigations. Moreover, it brings clarity to how various scaling approaches perform, highlights potential variables that influence that performance, and offers evidence-based recommendations to the ongoing debate among practitioners (Wolpers 2023; Hinshelwood 2023).

A key challenge that scaling approaches address is how to scale the work from one Agile team to many Agile teams. Thus, the effectiveness of those teams is a useful key metric to compare approaches on. This is discussed next.

Team effectiveness is defined by Hackman (1976) as “the degree to which a team meets the expectations of the quality of the outcome”. This is conceptually different from team performance or developer productivity (e.g., lines of code, merge times, velocity). While such measures are useful, what constitutes a high or a low result is highly contextualized and therefore difficult to compare between organizations (Mathieu et al. 2008). In other words, team effectiveness serves as a more comprehensive measure of productivity, focusing on stakeholder and team member satisfaction rather than context-specific quantitative metrics. Indeed, team effectiveness is typically operationalized as a composite of the satisfaction of stakeholders with team outcomes (e.g., customers, users) and the satisfaction of team members with the work needed to deliver those outcomes (Wageman et al. 2005). While such measures are more subjective than productivity metrics, they are also easier to compare across organizations (Doolen et al. 2003; Purvanova and Kenda 2021; Kline and MacLeod 1997). Indeed, the direct measurement of stakeholder satisfaction has been proposed as a comparative key metric for Agile teams (Mahnic and Vrana 2007; Kupiainen et al. 2014).

In the context of Agile teams, job satisfaction has been found to correlate positively with Agile practices and the ability to achieve business impact with one’s work (Kropp et al. 2020; Tripp et al. 2016; Keeling et al. 2015). Another perspective is provided by Verwijs and Russo (2023b). They induced team effectiveness as a composite of stakeholder satisfaction and team morale from 13 case studies. Team morale is conceptually similar to job satisfaction, but draws from positive psychology to emphasize the motivational quality of doing work as part of a team (Kahn 1990; Mahnic and Vrana 2007). Additionally, their study identified five factors that determine team effectiveness and validated a questionnaire to assess it. The model showed excellent fit based on data from 1,978 Agile teams.

The first factor is Responsiveness. It reflects the ability of teams to respond quickly to emerging needs and requirements of stakeholders. Its lower-order processes are release frequency, release automation, and refinement. The second factor, Stakeholder Concern, captures the extent to which teams understand what is important to their stakeholders and work to clarify it. Its lower-order processes include stakeholder collaboration, shared goals, sprint review quality, and value focus. The third factor is Continuous Improvement and captures the degree to which teams engage in a process of continuous improvement and feel the safety to do so. It is composed of the lower-order processes of psychological safety, concern for quality, shared learning, metric usage, and learning environment. Team Autonomy is the fourth factor and reflects the latitude of teams to manage their own work. It is composed of the lower-order processes of self-management and cross-functionality. The fifth factor represents Management Support.

Together, this operationalization of team effectiveness and the five core factors that give rise to it provide a strong foundation for the comparison of Agile scaling approaches.

To address our research question, we conducted a comprehensive survey targeting both teams and their associated stakeholders. We employed Analysis of Variance (ANOVA) and multiple linear regression (Hair Jr et al. 2019) to compare the results between different scaling frameworks. This section discusses the research hypotheses (Section 3.1), the sample (Section 3.2), measurement instruments (Section 3.3), and method of analysis (Section 3.4).
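To make the first of these analyses concrete, the sketch below computes the F statistic of a one-way ANOVA for a score grouped by scaling approach. It is a minimal illustration only: the function name `one_way_anova_f` and the scores are hypothetical, and it is not the analysis code used in this study.

```python
from statistics import mean


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a one-way ANOVA.

    `groups` maps a group label to a list of numeric scores.
    """
    samples = list(groups.values())
    all_scores = [score for sample in samples for score in sample]
    grand_mean = mean(all_scores)

    # Variation of the group means around the grand mean.
    ss_between = sum(len(s) * (mean(s) - grand_mean) ** 2 for s in samples)
    # Variation of individual scores around their own group mean.
    ss_within = sum((score - mean(s)) ** 2 for s in samples for score in s)

    df_between = len(samples) - 1
    df_within = len(all_scores) - len(samples)
    f_value = (ss_between / df_between) / (ss_within / df_within)
    return f_value, df_between, df_within


# Hypothetical team-level scores (invented, not data from the study).
scores = {
    "SAFe": [1.0, 2.0, 3.0],
    "LeSS": [2.0, 3.0, 4.0],
    "Scrum of Scrums": [3.0, 4.0, 5.0],
}
f_value, df_between, df_within = one_way_anova_f(scores)
print(f"F({df_between}, {df_within}) = {f_value:.2f}")
```

A large F indicates that the group means differ more than the within-group spread would suggest; whether such a difference matters in practice is a separate question that effect sizes address.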

This study contributes to existing research by being the first to use a quantitative approach to empirically compare the results on a consistent set of measures across different scaling approaches. We do so through the lens of “Team Effectiveness”, a composite of stakeholder satisfaction and team morale, and the five team-level factors that shape it according to Verwijs and Russo (2023b), as described in Section 2.

This study primarily investigates one scaling approach of higher complexity (“SAFe”), and two approaches of lower complexity (“LeSS” and “Scrum of Scrums”). A separate category is custom approaches to scaling that are developed by organizations internally. Consistent with the pattern from other comparative studies, we do not expect to find substantial differences between scaling approaches on team effectiveness and the five core processes that contribute to it. Thus, we hypothesize:

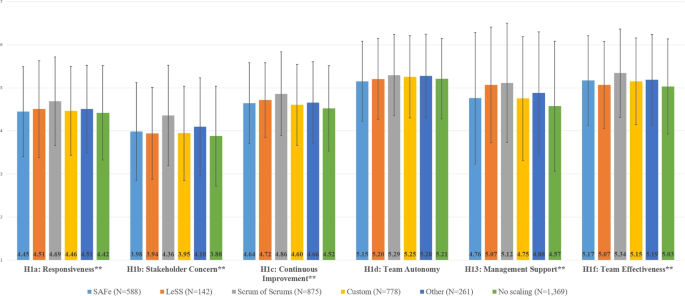

Hypothesis 1 (H1). Between scaling approaches, Agile teams are similar in terms of their responsiveness (H1a), concern for stakeholders (H1b), their ability to improve continuously (H1c), autonomy (H1d), management support (H1e), and their overall effectiveness (H1f).

One limitation of the study by Verwijs and Russo (2023b) is that it measured stakeholder satisfaction indirectly through the perception of team members. Such measures are susceptible to a “halo effect” (Mathieu et al. 2008) where teams that feel they are doing well may inflate their perceived satisfaction of stakeholders. To address this, we aim to directly measure the satisfaction of the stakeholders of teams with the responsiveness, release frequency, and quality of what is delivered by teams. Similarly to H1, we do not expect substantial differences in stakeholder satisfaction based on the scaling approach alone:

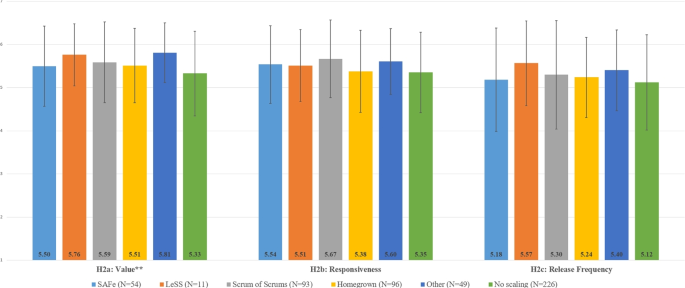

Hypothesis 2 (H2). Between scaling approaches, the satisfaction of stakeholders is similar for quality (H2a), responsiveness (H2b), and value (H2c).

This study includes two control variables to account for alternative explanations. The first control variable concerns the experience that teams have with Agile. Since lightweight approaches prescribe less than more complex ones, it is reasonable to assume that teams that are less experienced with Agile may struggle more with lightweight frameworks whereas the reverse may be true for very experienced teams with highly prescriptive frameworks. Thus, we will control for the experience that teams have with Agile when comparing scaling approaches.

The second control variable concerns the size of the organization a team is part of. Large organizations may be more inclined to opt for enterprise-oriented frameworks like “SAFe” or a custom approach, whereas smaller organizations may prefer the simplicity of “LeSS” or “Scrum of Scrums”. Since the size of an organization itself may influence the effectiveness of teams, we will control for it in this study.

Data collection was performed between September 2021 and September 2023 through a public online survey (see Footnote 1). The survey was promoted through social media such as Twitter, LinkedIn, and Medium, various meetups in the Agile community, at professional conferences, and through a series of blog posts and podcasts created by the first author. 15,078 members of 4,013 Agile teams participated in the survey, as well as 1,841 stakeholders of 529 of those teams. Note that the group of stakeholders consisted of people external to the team, such as clients, customers, and users, and not team members. Due to the public nature of the survey, we were unable to calculate a response rate.

Public surveys are susceptible to response bias due to the self-selection of participants (Meade and Craig 2012). We employed several strategies outlined in the literature to reduce this threat to the validity of this study (Meade and Craig 2012). First, we ensured that team members and stakeholders could participate anonymously and emphasized this anonymity in our communication. Second, we encouraged honest answers by providing teams with a detailed team-level report and relevant feedback for their team upon completion. Third, to ensure a higher response rate from stakeholders, we provided teams with a mechanism to invite stakeholders themselves by sharing a link to a standardized questionnaire for stakeholders. Fourth, we removed all survey participants with a completion time below the 5th percentile of the completion times for their segment (team members or stakeholders), as well as all participants who answered very few questions (fewer than ten for both team members and stakeholders). Participant demographics, such as age, experience, business domains, and roles in our sample, are shown in Table 1.
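The fourth screening step can be sketched as follows. This is an illustration under stated assumptions rather than the procedure actually implemented: the record fields (`segment`, `seconds`, `answered`), the function names, and the linear-interpolation percentile are all hypothetical choices, and the sample records are invented.

```python
from collections import defaultdict


def percentile(values, q):
    """Linear-interpolation percentile (q in [0, 100]) of a non-empty list."""
    ordered = sorted(values)
    pos = (len(ordered) - 1) * q / 100
    lo = int(pos)
    hi = min(lo + 1, len(ordered) - 1)
    return ordered[lo] + (ordered[hi] - ordered[lo]) * (pos - lo)


def screen_responses(responses, min_answered=10, cutoff_pct=5):
    """Drop responses that were too fast for their segment or too incomplete."""
    times_by_segment = defaultdict(list)
    for r in responses:
        times_by_segment[r["segment"]].append(r["seconds"])

    # One completion-time cutoff per segment (team members, stakeholders).
    cutoffs = {
        segment: percentile(times, cutoff_pct)
        for segment, times in times_by_segment.items()
    }
    return [
        r
        for r in responses
        if r["seconds"] >= cutoffs[r["segment"]] and r["answered"] >= min_answered
    ]


# Invented example records: 20 team members and 3 stakeholders.
members = [
    {"segment": "team_member", "seconds": 100 * i, "answered": 30}
    for i in range(1, 21)
]
members[-1]["answered"] = 5  # one participant answered too few questions
stakeholders = [
    {"segment": "stakeholder", "seconds": s, "answered": 12} for s in (50, 60, 70)
]
kept = screen_responses(members + stakeholders)
print(len(members) + len(stakeholders), "->", len(kept))
```

Computing the cutoff per segment matters because the two questionnaires differ in length, so a single pooled threshold would disproportionately remove respondents of the shorter one.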

We performed regression analysis to predict the responsiveness of teams based on the scaling approach in use while controlling for the experience of teams with Agile and organization size (see Table 6). The regression model with controls was significant, \(F(7, 3788) = 293.876, p < .01\), and explained 35.2% of the observed variance in responsiveness, compared to 0.9% for a secondary regression without control variables (see Footnote 2 and Table 22 in Appendix C). The overall effect size of the controlled model is qualified as large, \(f^2 = .543, 90\% CI [.520, .566]\).
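The reported effect size and F statistic both follow from the explained variance. As a worked check, the sketch below applies the standard formulas \(f^2 = R^2 / (1 - R^2)\) and \(F = (R^2 / k) / ((1 - R^2) / df_{res})\) to the rounded values quoted above; the function names are hypothetical, and small deviations from the reported figures are expected because \(R^2\) is rounded to three decimals.

```python
def cohens_f2(r_squared):
    """Cohen's f^2 for a whole regression model: R^2 / (1 - R^2)."""
    return r_squared / (1 - r_squared)


def f_statistic(r_squared, n_predictors, df_residual):
    """Overall F test of a regression model, expressed through R^2."""
    return (r_squared / n_predictors) / ((1 - r_squared) / df_residual)


r_squared = 0.352  # explained variance of the controlled model (35.2%)
f2 = cohens_f2(r_squared)
f_value = f_statistic(r_squared, n_predictors=7, df_residual=3788)

print(f"f^2 = {f2:.3f}")            # matches the reported .543
print(f"F(7, 3788) = {f_value:.1f}")  # close to the reported 293.876
```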

In the following subsections, we explore how the satisfaction of stakeholders with each area is influenced by the scaling approach with and without controlling for team experience and organization size.

Table 14 Coefficients, standard errors (SE), betas, t-values, significance, explained variance (\(R^2\)), and effect size (\(f^2\)) with 90% confidence intervals for the chosen scaling approach, experience of teams with Agile, and the size of the organization on stakeholder satisfaction with value

Of the control variables, only the experience of teams with Agile has a significant effect, which is qualified as small based on its size, \(beta = .284, p < .01, f^2 = .082, 90\% CI [.046, .118]\). This means that experienced teams are a little more able to satisfy their stakeholders.

None of the scaling approaches significantly predicts the satisfaction of stakeholders with responsiveness. This means that stakeholder satisfaction in this area can be high or low for teams, regardless of the chosen scaling approach.

Of the control variables, only the experience of teams with Agile has a significant effect, which is qualified as small based on its size, \(beta = .213, p < .01, f^2 = .042, 90\% CI [.017, .072]\). So experienced teams are a little more able to satisfy their stakeholders in this area, although this effect is small and satisfaction is determined more by other factors.

Table 15 Coefficients, standard errors (SE), betas, t-values, significance, explained variance (\(R^2\)), and effect size (\(f^2\)) with 90% confidence intervals for the chosen scaling approach, experience of teams with Agile, and the size of the organization on stakeholder satisfaction with responsiveness

None of the scaling approaches significantly predicts the satisfaction of stakeholders with release frequency. This means that stakeholder satisfaction in this area can be high or low for teams, regardless of the chosen scaling approach.

Of the control variables, only the experience of teams with Agile has a significant effect, which is qualified as small based on its size, \(beta = .275, p < .01, f^2 = .077, 90\% CI [.042, .111]\). So experienced teams are a little more able to satisfy their stakeholders in this area, although this effect is small and satisfaction is determined more by other factors.

Table 16 Coefficients, Standard Errors (SE), Beta’s, t-values, significance, explained variance ( \(R^2\) ) and effect size ( \(f^2\) ) with 90% confidence intervals for the chosen scaling approach, experience of teams with Agile, and the size of the organization on stakeholder satisfaction with release frequency

A summary of the betas (i.e., indicating the direction and strength of the relationships) and effect sizes ( \(f^2\) ) that were identified in the regression analyses in this section is provided in Table 17 along with their significance and effect size classifications (Cohen 2013). After controlling for team experience and organization size, we found one significant positive effect of moderate size from “Other approach” on the satisfaction of stakeholders with value. Another small effect was found for team experience on all indicators. While the scaling approach has some influence, it is very small and probably not practically relevant.

Table 17 Summary of beta’s and effect sizes ( \(f^2\) ) by dimensions of stakeholder satisfaction for scaling approaches and control variables along with significance and effect size classification
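The effect size classification used in this summary can be sketched as a simple threshold function. The cutoffs below are Cohen's conventional benchmarks for \(f^2\) (.02, .15, and .35). The labels "none" and "moderate" follow the wording used in this paper, and the function name is hypothetical.

```python
def classify_f2(f2: float) -> str:
    """Classify Cohen's f^2 using the conventional benchmarks (.02, .15, .35)."""
    if f2 >= 0.35:
        return "large"
    if f2 >= 0.15:
        return "moderate"
    if f2 >= 0.02:
        return "small"
    return "none"


# Effect sizes reported in this section
reported = {
    "responsiveness model with controls": 0.543,
    "team experience on satisfaction with value": 0.082,
    "team experience on satisfaction with responsiveness": 0.042,
    "team experience on satisfaction with release frequency": 0.077,
}

for label, value in reported.items():
    print(f"{label}: f2 = {value:.3f} -> {classify_f2(value)}")
```

Applied to the values above, the overall controlled model is classified as large, and the three effects of team experience on stakeholder satisfaction are classified as small.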

This study investigated to what extent the effectiveness of Agile teams is influenced by the Agile scaling approach in use. We begin with a summary of our results, first for team effectiveness and its core processes as reported by 12,534 team members from 4,013 teams, and then for the satisfaction with the outcomes of 529 of these teams as reported by 1,841 stakeholders.

First, for team effectiveness and the core processes that determine it (Verwijs and Russo 2023b), we found small but significant differences between the scaling approaches for responsiveness, stakeholder concern, continuous improvement, team autonomy, management support, and overall team effectiveness. However, those effects mostly disappeared in the regression analyses that controlled for the experience of teams and organization size. While some significant effects were still observed, their standardized beta coefficients were very small and ranged between -.070 and .060. Moreover, their effect size ( \(f^2\) ) was so small as to be qualified as “none”. For example, the difference between a team that uses “SAFe” as their scaling approach and a team that does not use scaling is only -.042 for responsiveness, or -.020 for overall team effectiveness. Similarly, teams that use “Scrum of Scrums” as their scaling approach compared to teams that do not use scaling see an increase of .057 in stakeholder concern. While such differences are statistically significant, their effect is too small to be practically relevant. We conclude from these results that there is no meaningful effect of scaling approaches on the core processes of Agile team effectiveness (responsiveness, stakeholder concern, continuous improvement, team autonomy, management support, and overall effectiveness).

With respect to the satisfaction reported by stakeholders with team outcomes, a small but significant mean difference was found for delivered value, but not for responsiveness and release frequency. However, those effects mostly disappeared in the regression analyses that controlled for the experience of teams and organization size. The only exception was one significant effect we observed on the satisfaction of stakeholders with value delivered by teams that use a scaling approach other than the ones we analyzed specifically (“Other approach”). The standardized beta coefficient for this effect was .151 and its size was qualified as “moderate”. We conclude from these results that, overall, there is no meaningful effect of scaling approaches on the satisfaction of stakeholders with team outcomes (on value, responsiveness, and release frequency).

Of our control variables, the experience that teams have with Agile contributed most strongly with effect sizes ranging between moderate and large. Organization size showed some significant effects, but all were qualified as small based on their effect size. Finally, we observed that regression models that only considered the scaling approach tended to explain about 1-2% of the variance, whereas regression models that included the control variables accounted for up to 47.5% of the variance. This further underscores that scaling approaches alone explain very little variance in team effectiveness and stakeholder satisfaction and that context variables (such as experience of teams with Agile) explain much more variance. Thus we conclude that teams appear to be comparably effective under any scaling approach and their stakeholders are equally satisfied. Instead, the variance observed is attributable to other factors, one of which is the experience of teams with Agile. Such team-level factors are clearly more relevant.

This study confirms the pattern that emerged from qualitative comparisons of scaling approaches based on case studies, namely that no approach is clearly superior. Moreover, lightweight approaches do not seem to outperform more expansive and prescriptive approaches, which is a prevalent belief among practitioners (Wolpers 2023; Hinshelwood 2023). We will now explore potential explanations for our findings.

First, it is possible that the correlation between what is expected by the various approaches and what actually goes on in and around teams is low. All scaling approaches presume to encourage behavior that is consistent with the Agile manifesto (Beck et al. 2001), such as frequent releases and close collaboration with stakeholders. One assumption behind the adoption of any scaling approach is that it will lead to such behavior. One example of this is the extent to which teams collaborate with stakeholders like users and customers. This involves such behaviors as asking clarifying questions to stakeholders, inviting them to provide feedback, and spending time with them to learn what is needed. Our measure for stakeholder concern specifically assessed the presence of such behaviors, with questions such as “People in this team closely collaborate with users, customers, and other stakeholders.” and “The Product Owner of this team uses the Sprint Review to collect feedback from stakeholders”. However, our results show that the presence of such behaviors varies within scaling approaches, but not substantially between scaling approaches. Furthermore, the authors have observed teams in settings with “LeSS”, “SAFe”, “Scrum of Scrums”, and even unscaled Scrum that were either not allowed to interact with stakeholders directly or applied the term “stakeholder” to internal roles, like a project manager or product owner, that did not interact with users or customers directly either.

Another example of this is responsiveness. Frequent delivery is indeed a critical success factor of Agile projects (Chow and Cao 2008; Verwijs and Russo 2023b). Our measure for responsiveness assessed this with questions like “The majority of the Sprints of this team result in an increment that can be delivered to stakeholders.” and “For this team, most of the Sprints result in an increment that can be released to users.”. While substantial variation was observed within scaling approaches, there was no substantial difference between scaling approaches. This may be an issue with language. Organizations may vary in what they define as a “delivery” and what is “frequent”. The authors have anecdotally observed cases where a release to a staging environment was labeled as a “release”, even though internal procedures and processes restricted the actual release to a production environment to once per year. The actual behavior here is not what is intended by the Agile manifesto (Beck et al. 2001), which aims to validate assumptions through frequent releases to users.

Taken together, this challenges the assumption that scaling approaches themselves change behavior in and around teams to be more consistent with the Agile manifesto. As the examples illustrate, each approach can be implemented in a manner that only results in superficial change, with different labels and different roles, but no deeper change of behavior to be more consistent with Agile development practices. Other factors seem more important in encouraging that behavior, such as experience with Agile. Indeed, Kropp et al. (2016) identify experience with Agile as a crucial factor from a survey study among IT professionals. Moreover, these and other authors have also drawn attention to other factors that strongly moderate team effectiveness in Agile environments, such as organizational culture (Kropp et al. 2016; Othman et al. 2016), support from top management (Van Waardenburg and Van Vliet 2013; de Souza Bermejo et al. 2014; Verwijs and Russo 2023b; Russo 2021b), teamwork (Moe et al. 2010), close collaboration with stakeholders (Hoda et al. 2011; Van Kelle et al. 2015; Verwijs and Russo 2023b) and high autonomy (Junker et al. 2021). Such factors that have emerged from empirical research appear more relevant to understanding what makes teams effective than precisely which approach is used to scale Agile.

A second explanation may lie in the extent to which the Agile practices of a scaling approach are adopted. Organizations and teams may vary in the degree to which they follow what is prescribed in their scaling approach of choice. Larger differences may be observed when the analyses control for the degree to which all parts of the approach are practiced, and not just a selection of them. However, approaches like “SAFe” and “LeSS” specifically state that they are modular and that organizations should select the elements that work for them (Inc 2018; LeSS Framework 2023), which makes it hard to define when their proposed set of practices is properly adopted.

A third possibility is that the number of teams moderates the association between team effectiveness and stakeholder satisfaction on the one hand and the scaling approach on the other. As the number of teams grows and coordination becomes more complicated, this may put more strain on the scaling approach (Ebert and Paasivaara 2017). Whereas a complex approach like “SAFe” may provide more structure and guidance to take this strain, simpler approaches like “LeSS” and “Scrum of Scrums” may provide less support. This seems particularly relevant for organizations with limited experience with Agile. Future investigations can control for both experience and the number of teams to test alternative explanations.

After this research journey, we recognize that instead of focusing on the implementation of specific aspects of a scaling framework, organizations should probably go back to the roots and focus on the principles of the Agile manifesto (Beck et al. 2001). We suggest evaluating if teams release frequently, use those releases to collect feedback from stakeholders, create recurring opportunities to identify improvements and are cross-functional and sufficiently autonomous. However, this is what four of the five factors of team effectiveness proposed by Verwijs and Russo (2023b) effectively measure. For example, teams only score high on “Responsiveness” if they release every iteration, invest time in refinement and automate their release procedures. Similarly, teams only score high on “Stakeholder Concern” if they use reviews to collect feedback from stakeholders, have an ordered product backlog and set valuable short- and long-term goals. Thus, this study effectively compared teams at varying levels of Agile adoption across scaling approaches and found no meaningful differences between scaling approaches alone. This further reinforces that the approach itself does not make the difference, but the degree to which organizations honor the principles of Agile software development does (i.e., collaborate closely with users, and release to them frequently).

We now turn to the implications of our study and what they mean in the day-to-day practice of professionals and organizations that attempt to scale Agile methodologies.

The first implication is that there is no single “best” scaling approach, at least where team effectiveness and stakeholder satisfaction are concerned. Any variation in these variables is attributable to other factors. This also means that teams can be similarly effective under any scaling approach, and have equally satisfied stakeholders. Thus, we recommend that practitioners prioritize those factors that have been empirically linked to team effectiveness and stakeholder satisfaction and worry less about which scaling approach to pick. This includes factors such as continuous improvement (Verwijs and Russo 2023b), psychological safety (Edmondson and Lie 2014), inter-team collaboration (Riedel 2021; Dingsøyr and Moe 2013), teamwork (Strode et al. 2022), team autonomy (Junker et al. 2021; Verwijs and Russo 2023b) and socio-technical skills of developers (Verwijs and Russo 2023b). Verwijs and Russo (2023b) were able to explain up to 75.6% of the variance of team effectiveness with team autonomy, a climate of continuous improvement, concern for stakeholders, responsiveness, and management support.

Second, we follow the conclusions of Almeida and Espinheira (2021) and recommend that practitioners pick the scaling approach that best suits the culture, structure, and experience of their organization. The comprehensiveness of “SAFe” may work better in highly regulated, corporate settings that have limited experience with Agile development, whereas the simplicity of “Scrum of Scrums” or “LeSS” may be more suited to organizations that are already familiar with it. Moreover, complex approaches to scaling like “SAFe” and “Disciplined Agile” provide more guidance for governance, release planning, budgeting, portfolio planning, and technical practices, whereas simpler approaches like “LeSS” and certainly “Scrum of Scrums” leave this open. To illustrate this, Ciancarini et al. (2022) conclude from a multivocal literature review and a survey among practitioners that the comprehensiveness and informed support of organizations by “SAFe” is the primary reason for its success. Organizations have to be cognizant of the gap between their current state and the desired state of Agility in such areas. If this gap is too large, a comprehensive approach may help organizations ease into it, whereas a simpler approach may leave so much open that it creates more confusion than clarity. Once organizations build experience with Agile development methodologies, they can transition into simpler approaches or develop their own. Thus, we propose that organizations select for goodness-of-fit instead of simplicity alone and periodically reflect on the extent to which their scaling approach enables or impedes teams in practicing the principles of Agile (software) development (Beck et al. 2001).

Third, we recommend that practitioners monitor stakeholder satisfaction and team effectiveness regardless of their scaling approach. Our results show substantial variation in these areas within each scaling approach, though not between approaches. We also recommend monitoring the extent to which the behaviors observed in and around teams reflect the principles of the Agile manifesto (Beck et al. 2001). This includes behaviors around stakeholder collaboration, collaborative goal-setting, frequent releases to production, expanding team autonomy, and continuously reflecting on and improving the process by which teams deliver to stakeholders.

Fourth, our results do not support the anecdotal negative opinion of complex approaches like “SAFe” we observed among Agile practitioners (Wolpers 2023; Hinshelwood 2023). It is likely that practitioners use different criteria, such as simplicity, personal preference, or lower prescriptiveness. However, Ciancarini et al. (2022) found that practitioners of “SAFe” do not consistently experience it as too complex, too rigid, inhibiting learning and improvement, or too hierarchical. Another possibility is that practitioners have a broader comparative experience with different approaches, whereas the subjects in our study - team members and stakeholders - generally have experience only with the approach in use in their organization. We cannot rule out that stakeholders of teams that use “SAFe” would be more satisfied with the value delivered by teams, their responsiveness, and release frequency under a simpler approach like “LeSS” or “Scrum of Scrums”. Unfortunately, such a hypothesis is hard to test as few stakeholders are in a position to experience one scaling approach with a team and then another consecutively. We also note that we did not observe meaningful differences in the core processes of team effectiveness between scaling approaches. Since these indicators have been found to explain a substantial amount of the variance in the effectiveness of Agile teams (Verwijs and Russo 2023b), we believe that comparable results are the more likely outcome.

Finally, experience with Agile emerged as a surprisingly strong predictor of team effectiveness and a moderate one for stakeholder satisfaction in this study. In contrast to the scaling approach, this factor does meaningfully and positively impact the extent to which teams engage with stakeholders, are responsive, engage in continuous improvement, capitalize on their autonomy, and experience more support from management. Experienced teams also tend to have more satisfied stakeholders. This may be closely related to what is called an “Agile mindset” (AM) by Eilers et al. (2022). They define it as consisting of an attitude towards learning, collaborative exchange, empowered self-guidance, and customer co-creation. Indeed, experienced teams in our study also show higher responsiveness, stakeholder concern, team autonomy, continuous improvement, management support, and overall team effectiveness. The presence of such a mindset in and around teams may be much more relevant to team and business outcomes, regardless of the scaling approach, as it allows teams to better deal with volatility, uncertainty, complexity, and ambiguity (VUCA; Eilers et al. 2022). The notion of an Agile mindset also provides a more fine-grained set of variables to investigate compared to the coarse-grained measure for Agile experience we used in this study. Thus, future studies can attempt to replicate our results with AM as a control variable. Finally, we cannot conclusively establish causality from a cross-sectional study such as this one, but it does suggest that broadening experience with Agile through training, coaching, and practice is an effective recommendation.

A summary of our core findings and implications is provided in Table 18.

Table 18 Summary of key findings & implications

In this section, we discuss the threats to the validity of our sample study. We published team-level data and syntax files to Zenodo for reproducibility (see Footnote 3).

Internal validity refers to the confidence with which changes in the dependent variables can be attributed to the independent variables and not other uncontrolled factors (Cook et al. 1979). Several strategies were used to maximize internal validity. First, online questionnaires are prone to bias and self-selection as a result of their voluntary (non-probabilistic) nature. This was counteracted by embedding our questions in a tool that is regularly used by Agile software teams to self-diagnose their process and identify improvements. Team members were invited by people in their organization to participate. Teams invited their own stakeholders. Second, we thoroughly cleaned the dataset of careless responses to prevent them from influencing the results. Third, we did not inform the participants of our specific research questions to prevent them from answering in a socially desirable manner.

Despite our safeguards, there may still be confounding variables that we were unable to control for. This is particularly relevant to the operationalization of team effectiveness, which is based on self-reported scores on team morale and the perceived satisfaction of stakeholders. Mathieu et al. (2008) recognize that such affect-based measures may suffer from a “halo effect”. We addressed this issue by also performing a secondary analysis that relied on the satisfaction as reported by stakeholders themselves for those teams where such evaluations were available in the tool. A moderate correlation was found between the satisfaction of stakeholders as reported by team members and the satisfaction reported by stakeholders directly (between .346 and .424). While this provides some evidence of a halo effect, the measure used with stakeholders was more extensive and multi-dimensional whereas the measure used with team members only asked to what extent they believed their stakeholders to be satisfied.

Several confounding factors have been identified that we could not control for. The first is that there may be a selection bias in which stakeholders are invited. We cannot conclusively rule out that teams only invited stakeholders that they assumed would be satisfied and ignored those who would not be. Similarly, it is possible that only highly effective teams invited their stakeholders whereas less effective teams did not. However, a post-hoc test did not reveal a significant effect of team effectiveness on the number of stakeholders invited, \(F(1,422) = .155, p = .69\) .
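The reported p-value for this post-hoc test can be approximated with the Python standard library alone. The sketch below relies on two assumptions that are not part of the original analysis: an F-statistic with one numerator degree of freedom equals a squared t-statistic, and with 422 denominator degrees of freedom the t-distribution is close enough to the standard normal to use the normal tail instead. The function name is hypothetical.

```python
import math


def approx_p_value_f_1df(f_value: float) -> float:
    """Approximate p-value for F(1, df2) when df2 is large.

    With one numerator degree of freedom, F = t^2. For large df2 the
    t-distribution is close to the standard normal, so the two-sided
    normal tail probability 2 * (1 - Phi(z)) = erfc(z / sqrt(2)) is used.
    """
    z = math.sqrt(f_value)
    return math.erfc(z / math.sqrt(2.0))


p = approx_p_value_f_1df(0.155)
print(round(p, 2))  # 0.69
```

The approximation agrees with the reported \(p = .69\) to two decimals. An exact value would require the F-distribution itself, which is not available in the standard library.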

Construct validity refers to the degree to which the measures used in a study measure their intended constructs (Cook et al. 1979). To measure the indicators of team effectiveness, we relied on an existing questionnaire that was developed and tested earlier in Verwijs and Russo (2023b). The questionnaire to evaluate the satisfaction of stakeholders was tested in a pilot study and improved before the primary study.

A confirmatory factor analysis (CFA) showed that all items loaded primarily on their intended scales (see Table 21 in the Appendix). A heterotrait-monotrait analysis (HTMT) yielded no issues. This means that our measures are distinguishable from each other and that any overlap does not confound the results. The reliability for all measures exceeded the cutoff recommended in the literature ( \(CR \ge .70\); Hair Jr et al. 2019), except social desirability. Thus, we are confident that we reliably measured the intended constructs.

Conclusion validity assesses the extent to which the conclusions about the relationships between variables are reasonable based on the results (Cozby et al. 2012). Our sample was large enough to identify medium effects ( \(f=.15\) ) with a statistical power of 96%. For the comparison of stakeholder satisfaction between scaling approaches, we do note that the group for “LeSS” contained only 7 teams, representing 44 stakeholders.

Finally, external validity concerns the extent to which the results actually represent the broader population (Goodwin and Goodwin 2016). First, we assess the ecological validity of our results to be high. Our questionnaire was integrated into a more general tool that Agile software teams use to improve their processes. Participants were invited by people in their organization, usually Scrum Masters. Thus, the data is more likely to reflect realistic teams than a stand-alone questionnaire or an experimental design.

We do not know how well our sample reflects the total population. However, our sample composition (Table 1) shows that a wide range of teams participated in the questionnaire, with different levels of experience from different parts of the world and different types of organizations. We also observed a broad range of scores on the various measures. This provides confidence that a wide range of teams participated. Furthermore, our sample size and the aggregation of individual-level responses to team-level aggregates reduce variability due to non-systematic individual bias.
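The aggregation step mentioned above can be illustrated with a minimal sketch. It assumes that team-level scores are the arithmetic mean of individual responses, which this section does not state explicitly. The team identifiers and scores are invented for illustration and are not taken from the dataset.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical individual-level responses: (team id, score on one scale)
responses = [
    ("team_a", 5.0),
    ("team_a", 6.0),
    ("team_a", 7.0),
    ("team_b", 3.0),
    ("team_b", 4.0),
]


def aggregate_to_team_level(rows):
    """Average individual scores per team (assumed aggregation rule)."""
    by_team = defaultdict(list)
    for team_id, score in rows:
        by_team[team_id].append(score)
    return {team_id: mean(scores) for team_id, scores in by_team.items()}


team_scores = aggregate_to_team_level(responses)
print(team_scores)  # {'team_a': 6.0, 'team_b': 3.5}
```

Averaging within teams dampens idiosyncratic individual responses, which is the reduction in non-systematic individual bias referred to above.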

This study focused on team-level effectiveness and team-level stakeholder satisfaction. Although this paper contributes to the understanding of organization-level outcomes, it would be meaningful for future studies to investigate how organizational outcomes vary by scaling approach (e.g., financial baseline, market share, revenue). Such investigations would contribute to a more comprehensive picture of how various scaling approaches affect organizations.

Future research can also investigate what happens when teams or organizations switch from one approach to another. How does such a change affect team-level effectiveness, stakeholder satisfaction, and organizational outcomes? That kind of longitudinal data would allow researchers to determine whether the level of reported satisfaction of stakeholders would be different if they had prior experience with other approaches.

Future research can also weigh the costs of the various scaling approaches against their benefits. If the choice of scaling approach does not correlate with actual team effectiveness or stakeholder satisfaction to a meaningful degree, it would be economical to pick the option with the lowest implementation costs. This is one area where the approaches vary substantially. “SAFe” requires additional training and certification, along with organizational changes, whereas “Scrum of Scrums” requires neither.

Finally, we recognize in our discussion that organizations probably do well in selecting a scaling approach based on contingency factors instead of simplicity alone. Simple and lightweight approaches like “LeSS”, “Nexus” and particularly “Scrum of Scrums” may leave too much open for organizations with very little experience with Agile, leading to confusion and uncertainty. A more prescriptive and comprehensive approach like “SAFe” or “Disciplined Agile” may offer more guidance here. Future investigations can develop evidence-based models to help organizations determine their goodness-of-fit with various scaling approaches. For example, this could include factors such as organizational culture, prior experience with Agile, leadership styles, budget structure, planning cycles, and regulatory requirements. One such model is proposed by Laanti (2017). This model identifies five progressive levels of successful scaling of Agile methodologies. Each level addresses how work is scaled and what benefits organizations gain from scaling. For example, organizations on the first level have the basics in place: they use a Product Backlog tool, maintain a prioritized Backlog, and apply a framework like Scrum. On the other hand, organizations at the highest level have developed their own approach to scaling and release new increments on a daily or even hourly basis. This agility is leveraged to expand into new markets, build new businesses and outperform competitors.

Agile scaling approaches have become increasingly popular as (software) projects become more complex (Mishra and Mishra 2011). Such approaches have been developed to address a perceived gap in Agile methodologies; namely how to scale Agile development from one team to many teams. Of these approaches, the Scaled Agile Framework (“SAFe”) is the most popular (Putta et al. 2018; Conboy and Carroll 2019) although it is also seen as the most complex one (Ebert and Paasivaara 2017). Other well-known scaling approaches are Large Scale Scrum (“LeSS”) and “Scrum of Scrums” (Schwaber 2004). But many organizations also develop their own Agile scaling approach. There is some anecdotal evidence that practitioners prefer simpler approaches over more complex ones such as SAFe (Wolpers 2023; Hinshelwood 2023).

Several studies have investigated the success factors and risks of the various scaling approaches, mostly based on qualitative methods, e.g., interviews with practitioners or case studies. Each scaling approach has its own challenges, but no approach appears to be consistently better (Almeida and Espinheira 2021; Edison et al. 2022; Putta et al. 2018). To date, no studies have systematically compared Agile scaling approaches based on empirical data from a consistent measure. The aim of this study was to investigate if certain Agile scaling approaches are more effective than others. We conducted a survey study among 11,376 team members grouped into 4,013 Agile teams to assess their effectiveness and the five core processes that give rise to it. Furthermore, stakeholder satisfaction was reported by 1,841 stakeholders for 529 of these teams.

While our results yielded some statistically significant differences, both the absolute differences and their effect size were small to non-existent. This applied both to the five indicators of team effectiveness as well as four dimensions of stakeholder satisfaction as reported by stakeholders themselves. We found that any observed differences often diminished when we controlled for the experience of teams with Agile and, to a lesser extent, the size of organizations. Thus, we conclude that the scaling approach itself is not a meaningful predictor of team effectiveness and stakeholder satisfaction in a practical sense. Teams that use “SAFe” appear to be as capable of being effective and satisfying stakeholders as teams that use “LeSS”, a custom approach, “Scrum of Scrums” or another scaling approach.

Our findings are consistent with prior investigations by Almeida and Espinheira (2021) and Edison et al. (2022). Without strong evidence that shows clear differences between scaling approaches, we feel that the evidence-based recommendation is for organizations to use the scaling approach that works for them and does not create too much of a mismatch between mindset, structure, and processes. Stakeholder satisfaction and team effectiveness can then be monitored to identify areas for improvement and provide training and coaching to expand the experience of teams with Agile methodologies.

To uphold the standards of data transparency and promote reproducible research, we present this comprehensive Data Availability Statement:

Datasets Supporting Manuscript Figures and Tables:

1. All datasets pivotal to the construction of figures and tables within this manuscript are made available for inspection and further research.

2. The primary data supporting our findings are hosted on the Zenodo repository under a CC-BY-NC-SA 4.0 license. The complete replication package is available under the title “Do Agile Scaling Approaches Make A Difference? An Empirical Comparison of Team Effectiveness Across Popular Scaling Approaches,” which can be accessed and cited as Verwijs and Russo (2023a).

Inclusive Data Declaration:

We confirm that all data generated or analyzed during this research are comprehensively included in the provided repository link.

We encourage fellow researchers and all interested parties to access, examine, and use the dataset, ensuring that they respect the stipulated license conditions. Our commitment to data transparency and reproducibility remains unwavering.

The GDPR-compliant survey has been designed so that teams can self-assess their Agile development process. It is available at the following URL: https://www.scrumteamsurvey.org

See Section 3.4 for the rationale behind the two analyses.

The complete replication package is openly available under a CC-BY-NC-SA 4.0 license on Zenodo, DOI: https://doi.org/10.5281/zenodo.8396487.

The authors would like to thank Rasmus Broholm for feedback on an earlier version of this manuscript.